Guide to Ephemeral Environments in Kubernetes

Ephemeral environments are gaining popularity among development teams who want to accelerate testing and streamline their continuous integration/continuous deployment (CI/CD) pipelines. If you are using Kubernetes, there are several ways to create them. By creating isolated, ephemeral environments, you can test code changes, experiment with new features, and reproduce issues without affecting your production systems. Check out Scaling Quality: The Industry’s Shift Towards Ephemeral Environments in 2024 to discover how teams are using these environments to improve code quality and streamline microservices testing.

Today we will go through various methods for creating ephemeral environments in Kubernetes, including microservices in-a-box, namespaces, separate clusters, and shared clusters. We will discuss the pros and cons of each approach, along with different factors that will influence the right selection for your needs. Let’s start by understanding the first option, which is deploying all services in an ephemeral environment on a single powerful machine or a virtual machine.

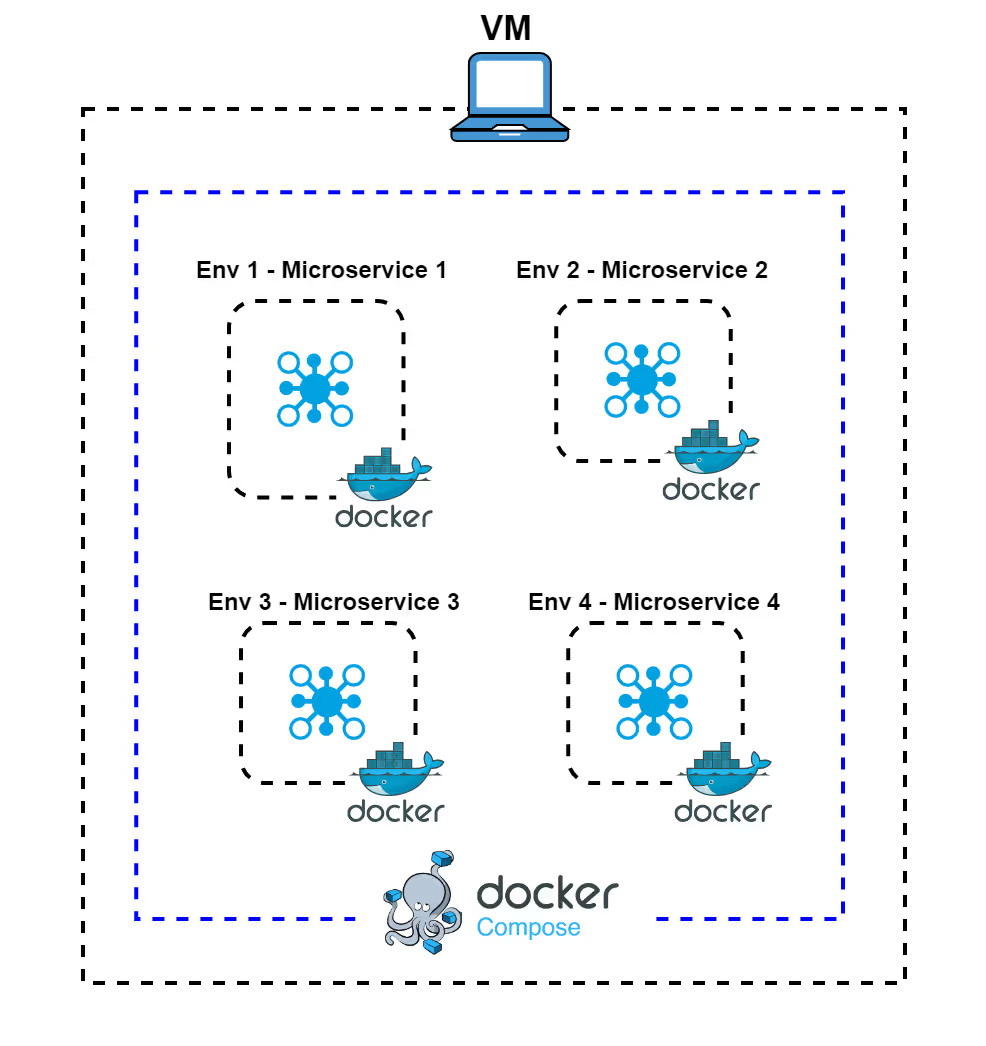

Option 1. Microservices In-a-Box (All-in-One Machine)

In this approach to creating ephemeral environments, all microservices are deployed together on a single, powerful machine or virtual machine (VM). Services are containerized and orchestrated using tools like Docker Compose or a single-node Kubernetes cluster. All the containers run side by side on one large instance (for example, an EC2 instance) with ample CPU and memory. It is a relatively simple solution but has its disadvantages. Let’s go through the pros and cons of this approach:

- Advantages:

- Quick Setup: It is best for small teams or simpler applications where you can get an ephemeral environment up and running quickly without the complexity of managing multiple clusters.

- Simplified Development Environment: With all the services of an environment running on the same machine, debugging and testing interactions between services is straightforward.

- Considerations:

- Limited Scalability: As all microservices are deployed on a single instance, it can be challenging to scale the environment horizontally. This makes it less suitable for larger applications with high traffic or resource demands.

- Resource Contention: If one microservice consumes too much CPU or memory, it might impact the performance of other services running on the same instance.

- Cannot be a Production Replica: Running all services on a single node does not accurately mimic a distributed production setup, which can lead to unexpected issues when deploying to a multi-node production cluster.
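
The resource contention consideration above can be checked before provisioning the machine. The following is a minimal sketch, assuming each service has a known CPU and memory ceiling. The service names, limits, and machine size are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceLimit:
    """CPU and memory ceiling for one containerized service."""
    name: str
    cpu_cores: float
    memory_mib: int


def check_fit(services, machine_cpu_cores, machine_memory_mib):
    """Return (fits, cpu_headroom, memory_headroom) for a single machine."""
    total_cpu = sum(s.cpu_cores for s in services)
    total_mem = sum(s.memory_mib for s in services)
    cpu_headroom = machine_cpu_cores - total_cpu
    mem_headroom = machine_memory_mib - total_mem
    fits = cpu_headroom >= 0 and mem_headroom >= 0
    return fits, cpu_headroom, mem_headroom


# Hypothetical services and limits, for illustration only.
SERVICES = [
    ServiceLimit("frontend", 0.5, 512),
    ServiceLimit("orders", 1.0, 1024),
    ServiceLimit("payments", 1.0, 1024),
    ServiceLimit("postgres", 2.0, 4096),
]

if __name__ == "__main__":
    # Hypothetical machine: 4 cores and 8 GiB of memory.
    fits, cpu_left, mem_left = check_fit(SERVICES, 4, 8192)
    print(f"fits={fits} cpu_headroom={cpu_left} memory_headroom_mib={mem_left}")
```

With these numbers memory fits, but the CPU ceilings add up to more than the machine offers, which is the situation where one busy service can slow down its neighbors.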

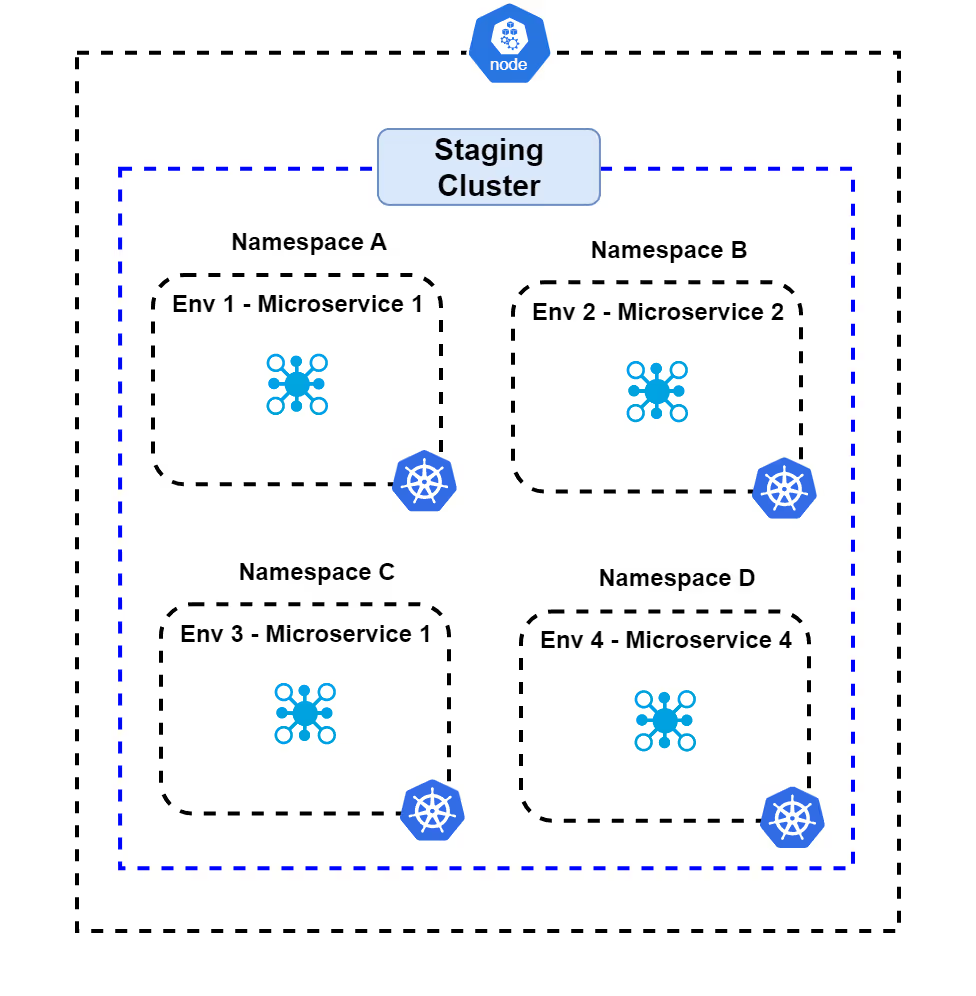

Option 2. Deploy in Namespace

With this approach, each ephemeral environment is isolated using Kubernetes namespaces within a shared cluster. This is a form of logical isolation where all ephemeral environments are in separate namespaces with each namespace having its own resource quota and limits.

- Advantages:

- Decent Isolation: Although part of the same cluster, namespaces still provide a decent layer of isolation between environments. You can manage resource quota and limits separately for each ephemeral environment in its own namespace.

- Centralized Management: Because all the ephemeral environments are part of the same cluster, their management is centralized.

- Considerations:

- Management Complexity: With a large number of namespaces, managing and maintaining them can become complex, especially if all you want to do is test a small change in just one of the microservices. Note that you have to manage not just the setup, policies, and quotas, but also the access controls for each ephemeral environment.

- Resource Overhead: Each namespace can introduce additional resource overhead, especially if it has its own resource quotas and limits. This can lead to inefficient resource utilization.

- Performance Impact: A high number of namespaces can impact the performance of the Kubernetes control plane, as it has to manage and keep track of all the namespaces and their associated resources.

- Security Risks: The more namespaces there are, the larger the attack surface, which increases the security risk.

- Synchronization Complexity: Keeping all the separate microservice copies up to date with the master branch can be challenging, especially as release velocity increases.
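
As a rough illustration of the per-namespace setup described above, the sketch below builds a `Namespace` and a `ResourceQuota` object for one environment and prints them as JSON. The `env` prefix, the `purpose` label, the quota name, and the quota values are assumptions made for this example, not recommendations.

```python
import json
import re


def namespace_name(branch, prefix="env"):
    """Turn a branch name into a valid DNS-1123 label (at most 63 characters)."""
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    return f"{prefix}-{slug}"[:63].rstrip("-")


def environment_manifests(branch, cpu="4", memory="8Gi", pods="20"):
    """Build Namespace and ResourceQuota objects for one ephemeral environment."""
    name = namespace_name(branch)
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        # The label is a hypothetical convention for finding environments later.
        "metadata": {"name": name, "labels": {"purpose": "ephemeral"}},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "env-quota", "namespace": name},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": pods,
            }
        },
    }
    return [namespace, quota]


if __name__ == "__main__":
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": environment_manifests("feature/add-login"),
    }
    print(json.dumps(bundle, indent=2))
```

The printed JSON could be piped to `kubectl apply -f -`. Deleting the namespace later removes everything inside it, which is what makes the environment ephemeral. Once a quota covers CPU and memory, pods in that namespace need to declare requests and limits, so a `LimitRange` with defaults is often added alongside it.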

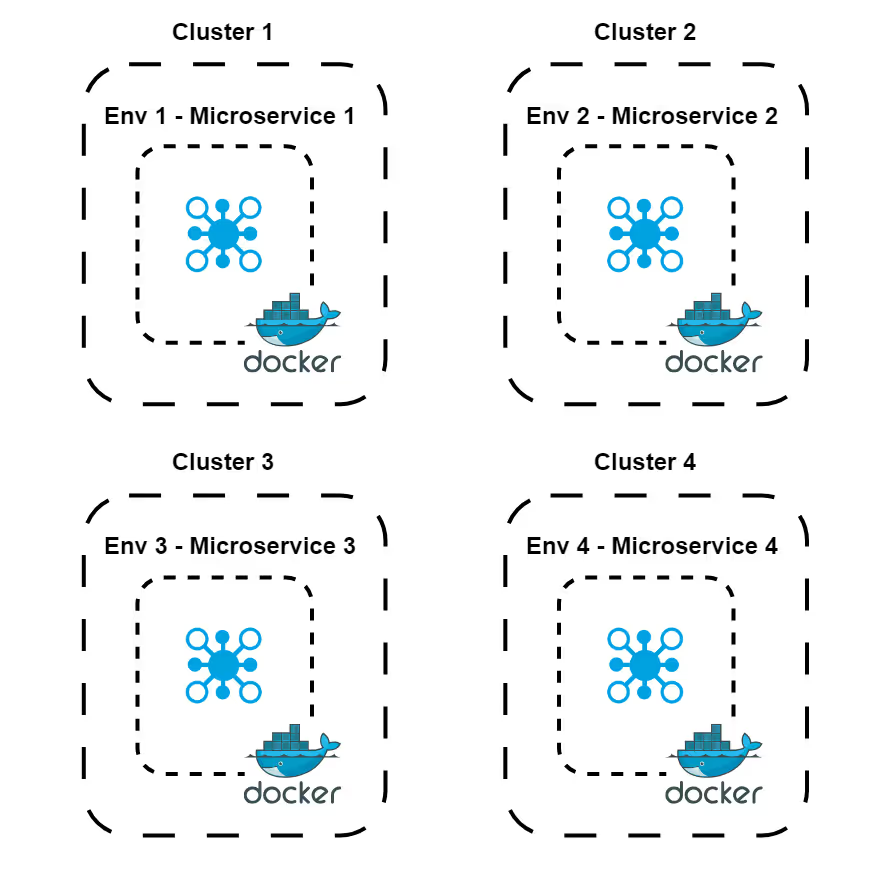

Option 3. Deploy in Separate Clusters

This approach involves deploying each ephemeral environment within its own Kubernetes cluster. As a result, you get ephemeral environments that are highly isolated and completely independent from each other. Here are some of the pros and cons of this strategy:

- Advantages:

- Total Isolation: Each cluster operates independently, so any issue in one environment, like crashes, will not affect others.

- Customizable Configurations: You can customize each cluster for specific testing needs, such as network policies or Kubernetes versions. With the individual customization for each cluster, teams can experiment and replicate production environments without affecting others.

- Considerations:

- High Resource Costs: Deploying each ephemeral environment in its own cluster requires substantial resources, driving up cloud costs. This is because each feature branch needs its own cluster requiring more CPU, memory, and storage.

- Added Complexity: Managing multiple clusters adds operational overhead. For each cluster, you will need to manage its own configuration, security policies, and maintenance. This might be overkill for small or simple applications, e.g. a monolithic MVP application.

- Longer Setup Times: The greater the number of clusters, the longer it will take to set up each one. This will potentially slow down the overall development time.

- Synchronization Complexity: Each microservice in separate clusters needs to be kept synchronized with the master branch, which can be difficult as the number of services and release velocity increase. This becomes especially challenging as each microservice is deployed independently.

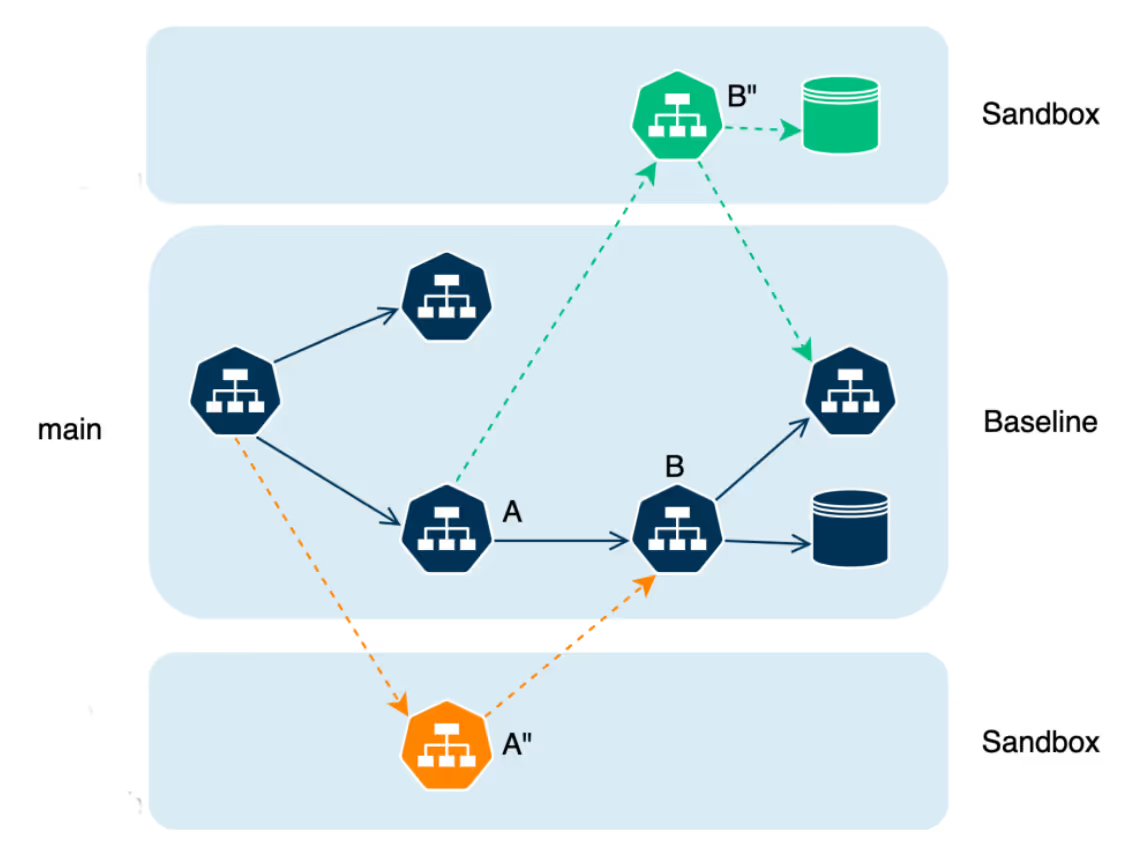

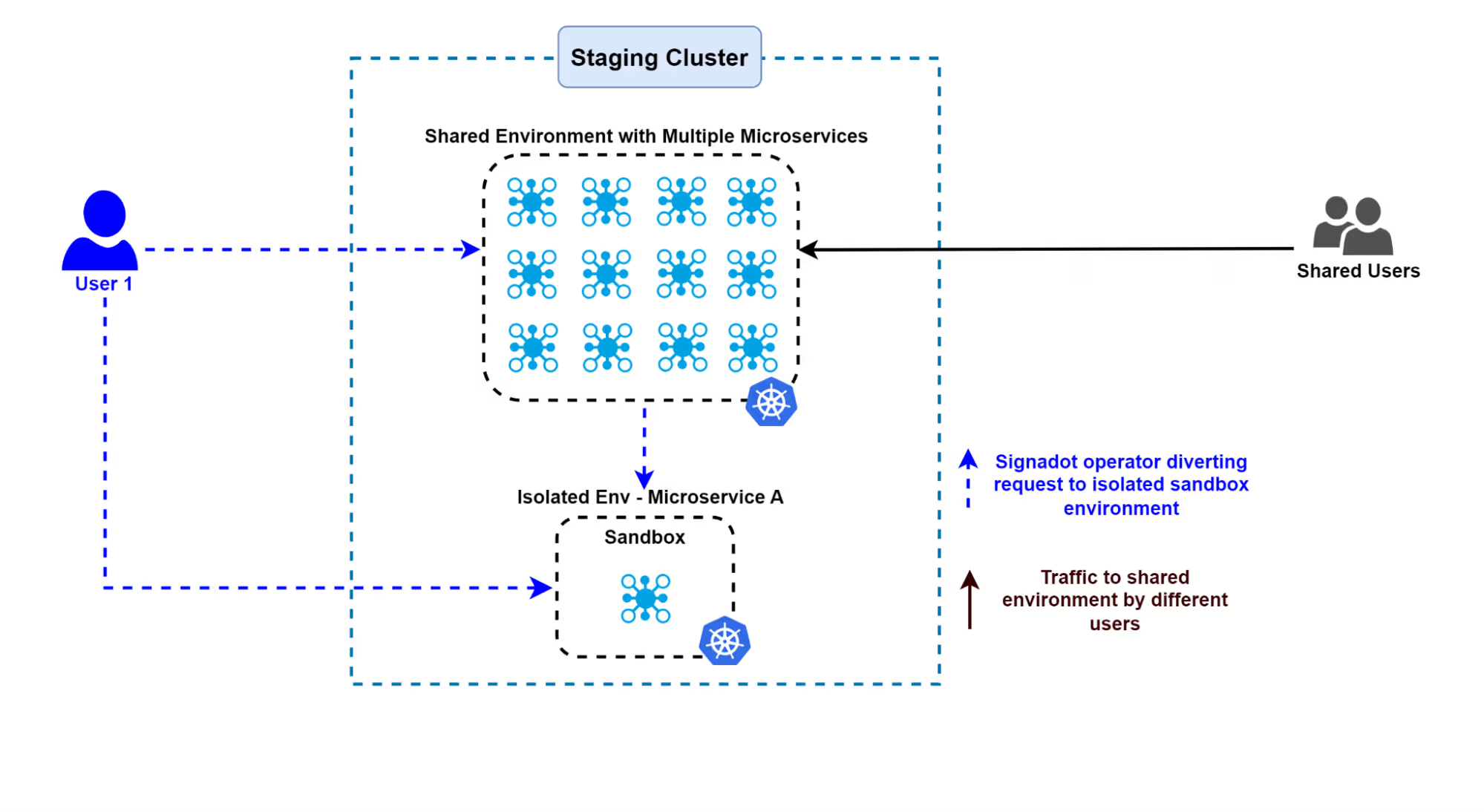

Option 4. Deploy in a Shared Cluster with Tunable Isolation

In this model, all ephemeral environments exist within a single shared cluster, where isolation levels can be adjusted to fit different needs, such as controlling how requests are routed to specific environments. This approach typically involves a baseline environment, which acts as the core shared environment, and sandboxes that isolate each code change. A sandbox is an ephemeral environment created on top of the baseline. It allows developers to test changes independently without disrupting the core environment. Below, we look at how this method keeps resource usage efficient and testing free of interference.

This shared cluster can be used collaboratively by multiple developers and QA teams. It uses minimal resources and is ideal for situations where cost efficiency is a high priority.

- Advantages:

- Efficient Use of Resources: Optimizes existing resources, lowering the overall infrastructure cost.

- Encourages Team Collaboration: Teams can work in parallel within the same cluster instead of spinning up new ones.

- Centralized Operations: Easier to maintain and manage as all environments are within a single cluster.

- Considerations:

- Keeping the Baseline Environment Stable: It is important to ensure that the baseline is well tested and stable, as instability can interfere with testing of other microservices. A strong CI/CD process with sufficient checks can help maintain stability.

- Need for a Strong Data Isolation Strategy: If deploying in a production environment, a robust data isolation strategy is necessary to avoid conflicts. However, in staging environments, the data isolation bar may not be as high.
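
The routing idea behind this model can be sketched in a few lines. This is an illustrative model only, not how any particular product implements it. It assumes each request carries a routing key in a header, and that a sandbox lists only the services it overrides. The header name, service names, and addresses are hypothetical.

```python
# Baseline: one shared copy of every service.
BASELINE = {
    "frontend": "frontend.baseline",
    "orders": "orders.baseline",
    "payments": "payments.baseline",
}

# Each sandbox overrides only the services it changes (hypothetical data).
SANDBOXES = {
    "pr-128": {"orders": "orders.pr-128"},
}

ROUTING_HEADER = "x-sandbox-id"  # hypothetical header name


def resolve(service, headers):
    """Return the sandboxed copy of a service if the request targets one.

    Requests without a routing key, or with an unknown key, fall back to
    the baseline copy.
    """
    sandbox_id = headers.get(ROUTING_HEADER)
    overrides = SANDBOXES.get(sandbox_id, {})
    return overrides.get(service, BASELINE[service])


if __name__ == "__main__":
    tagged = {ROUTING_HEADER: "pr-128"}
    print(resolve("orders", tagged))
    print(resolve("payments", tagged))
    print(resolve("orders", {}))
```

Only the changed service is duplicated, and every other call is served by the baseline. For this to hold across several hops, each service has to forward the routing key on its outgoing calls.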

Signadot – Best of Both Worlds

Signadot implements the shared-cluster strategy with tunable isolation. It provides the cost benefits of a shared staging cluster while still providing the required isolation by routing requests to isolated sandboxes. This allows you to deploy your bug-fixed microservice in its own ephemeral environment. As a result, you avoid the common issues associated with shared clusters, such as one service breaking the application being tested by another team. This approach ensures scalable microservices testing without compromising cost efficiency.

Factors Influencing Your Ephemeral Environment Strategy

Let’s go through some of the factors that will impact your decision on which option to adopt.

Branching Strategies

Trunk-Based Development

For teams practicing trunk-based development, where small and frequent commits get integrated straight into the main branch, the Microservices In-a-Box and Deploy in Shared Cluster models tend to be the most practical options:

- Microservices In-a-Box lets developers do quick and straightforward testing of incremental changes right on their local machines. As there is minimal effort required for the setup, you can achieve rapid feedback cycles.

- Deploy in a Shared Cluster is well-suited for scenarios that demand rapid deployments. This model supports quick provisioning and de-provisioning of environments within a shared cluster. That way, you can commit code frequently, and your builds will align with trunk-based workflows.

Feature Branches

When it comes to teams using feature branch workflows, where every feature gets its own branch, we have the following choices:

- Deploy in Namespace strikes a balance by offering isolated environments for each feature branch, all within a single cluster. This approach helps maintain separation while keeping resources efficiently shared.

- Deploy in Separate Clusters is the go-to model if you prefer isolation over cost. It’s especially helpful for teams working on features that require strict separation due to compliance or security requirements.

- Deploy in Shared Cluster with Routegroups: While traditionally the shared cluster approach may not be ideal for feature branches due to isolation concerns, tools like routegroups (see Signadot Routegroups) enable routing between feature branches more efficiently. This makes it a viable third option that offers tunable isolation without requiring completely separate clusters.

Deployment Velocity

High Deployment Frequency

For teams with high deployment frequency, the Deploy in Shared Cluster model is often the best match:

- This option makes it easy to provision and remove environments quickly, which is vital for continuous integration and deployment pipelines.

- Shared resources and smart routing help minimize setup time, which is ideal for teams that need to deploy fast and frequently.

Moderate to Low Deployment Frequency

Teams with a more moderate deployment cadence might consider the Deploy in Namespace model:

- This approach provides a good balance between resource use and environment isolation, making it well-suited for regular (but not necessarily rapid) deployments.

- For lower deployment frequencies, Microservices In-a-Box may be sufficient for smaller applications, whereas Deploy in Separate Clusters works better if complete isolation is worth the cost.

Resource Efficiency

When optimizing resource usage is a key goal, Microservices In-a-Box and Deploy in a Shared Cluster offer the most efficient solutions depending on the number of microservices:

- Microservices In-a-Box minimizes resource consumption by running all services on a single machine, best for small-scale projects.

- Deploying in a Shared Cluster lets you run multiple environments within a single cluster. This maximizes resource efficiency as the number of environments grows. However, as the number of microservices increases, resource contention may occur, and it requires careful management to prevent performance bottlenecks.
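
To make the efficiency difference concrete, the sketch below counts running workloads under two models: a full copy of every service per environment, and a shared baseline where each environment adds only the services it changes. The counts are hypothetical, and the model ignores per-workload size, so treat it as back-of-the-envelope arithmetic.

```python
def full_copy_workloads(num_services, num_environments):
    """Every environment runs its own copy of every service."""
    return num_services * num_environments


def shared_baseline_workloads(num_services, changed_per_environment):
    """One shared baseline plus only the changed services per environment."""
    return num_services + sum(changed_per_environment)


if __name__ == "__main__":
    # Hypothetical numbers: 30 services, 10 environments, 2 changed services each.
    services = 30
    changed = [2] * 10
    print(full_copy_workloads(services, len(changed)))
    print(shared_baseline_workloads(services, changed))
```

Under these assumptions the full-copy model runs 300 workloads and the shared-baseline model runs 50. The gap widens as either the service count or the number of environments grows.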

Scalability and Growth

When more ephemeral environments are added, scalability becomes a key factor in determining which approach is best. Depending on how easily you can manage multiple copies of services and ensure efficient resource use, some methods will scale better than others. Let’s take a look at which strategies perform best in terms of scalability.

- Deploy in Separate Clusters: This option provides the best scalability since you can add more clusters without affecting existing ones. Each cluster operates independently, offering complete isolation. This allows teams to develop and test in parallel without resource contention. It also enables customized configurations per cluster to suit specific needs. However, this approach significantly increases complexity and cost due to the need for multiple copies of services across clusters.

- Deploy in a Shared Cluster with Tunable Isolation: This option is cost-efficient and can scale well with the addition of more microservices. It allows multiple ephemeral environments to coexist within a single cluster by adjusting isolation levels as needed. This maximizes resource utilization and simplifies management since all environments are centralized. Managing the baseline environment becomes crucial as more ephemeral environments are added and requires strong coordination to keep services synchronized.

Conclusion

Choosing the right ephemeral environment strategy in Kubernetes depends on factors such as scalability, isolation, branching strategy, deployment frequency, and budget. For teams prioritizing rapid deployment and cost-effectiveness, models like Microservices In-a-Box or Deploy in Shared Cluster are ideal. If your focus is on isolation due to security or compliance needs, Deploy in Namespace or Deploy in Separate Clusters offer stronger separation at the cost of added complexity and resource usage. At the end of the day, it is your technical and business needs that will drive the selection of the most suitable model.
